What is Mistral 8x22B?

Mistral 8x22B is a large language model developed by Mistral AI and released under the name Mixtral 8x22B. It uses a sparse mixture-of-experts (MoE) architecture, which activates only part of the network for each token and so runs faster than a dense model of the same total size. Because its weights are openly available under the Apache 2.0 license, Mistral 8x22B has become a popular LLM in the AI community.

Mistral 8x22B builds on its predecessor, Mixtral 8x7B. It consists of 8 expert networks. The name suggests 8 × 22 billion = 176 billion parameters, but because the experts share the attention layers, the actual total is about 141 billion. Only two of the experts are used for each token, so the model effectively uses about 39 billion parameters at any given time.
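The idea that only some experts run for each input can be sketched in a few lines of Python. This is a toy illustration, not Mistral AI's implementation: the experts are made-up scaling functions, the gate scores are hard-coded, and picking the top 2 of 8 experts is an assumption based on how Mixtral-style models are usually described.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(gate_logits, k))

# Hypothetical experts: expert n simply multiplies its input by n + 1.
experts = [lambda x, scale=scale: scale * x for scale in range(1, 9)]

# Hypothetical gate scores for one token; a real router computes these from the token.
gate_logits = [0.1, 2.0, -1.0, 0.3, 1.0, 0.0, -0.5, 0.2]

selected = route_top_k(gate_logits)
output = moe_forward(1.0, experts, gate_logits)
print("experts used:", [i for i, _ in selected], "of", len(experts))
```

Six of the eight experts never execute for this token, which is why the compute cost tracks the active parameter count rather than the total.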

Mistral Performance and Benchmarks

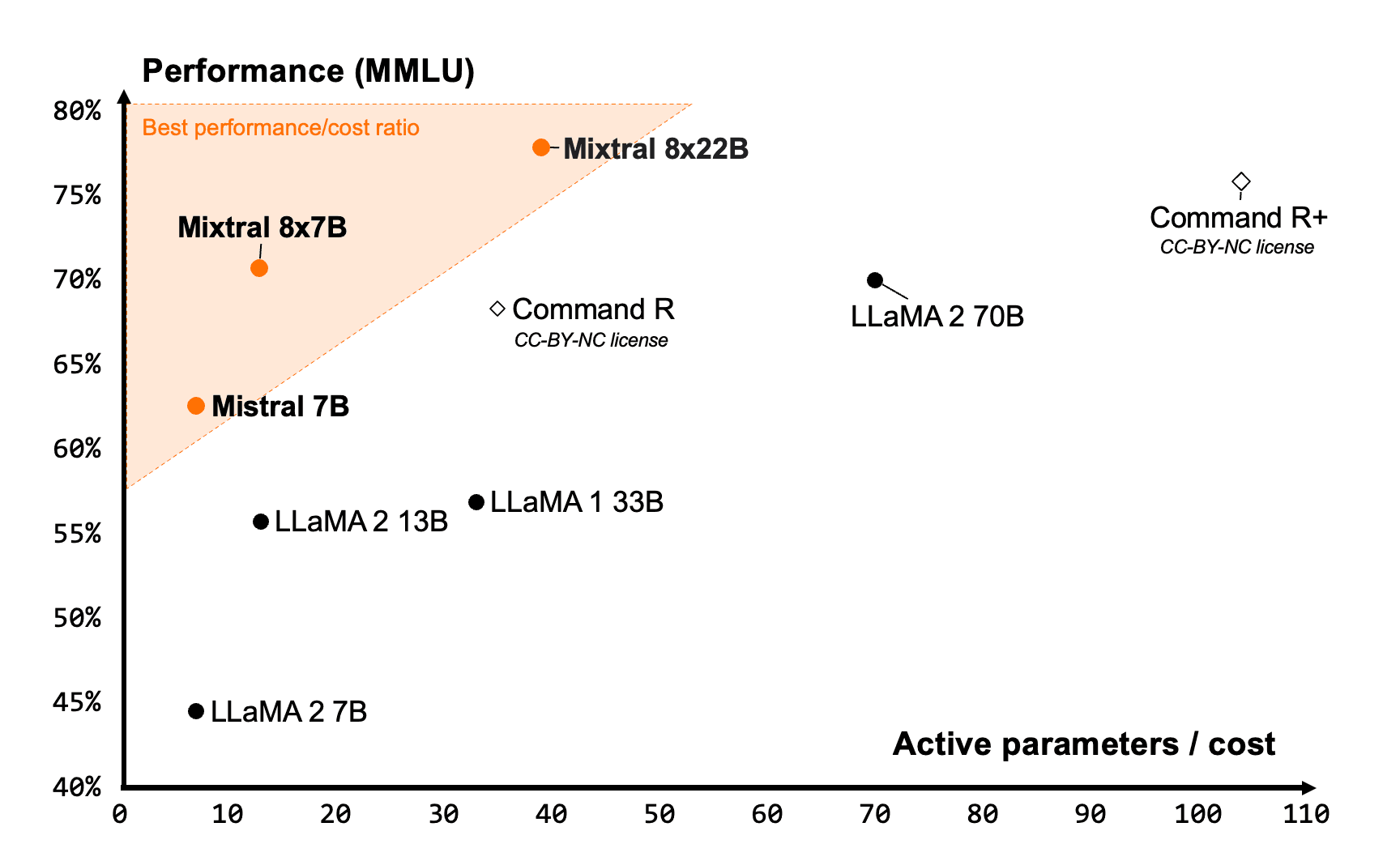

Mistral 8x22B performs well on a range of natural language processing tasks, outperforming models such as GPT-3.5 and Llama 2 70B in many evaluations. On the MT-Bench benchmark, which tests a model’s ability across a range of tasks, Mistral 8x22B scores 8.5 against GPT-3.5’s 7.8. It is strong at understanding and generating text in multiple languages, and it adapts to new tasks from a small number of examples. This adaptability makes it a good fit for diverse applications.

Mistral AI trained this model using a vast dataset from various languages and fields, incorporating sophisticated training strategies to enhance both the model's effectiveness and efficiency.

Installing Mistral 8x22B Locally with Ollama

To run Mistral 8x22B locally, use Ollama, a user-friendly framework that simplifies working with large models like Mistral 8x22B. Here’s a quick guide:

1. Install Ollama. Download the installer for your operating system from https://ollama.com. Note that the following command installs only the optional Ollama Python client library, not the Ollama runtime itself:

pip install ollama

2. Download the Mistral 8x22B model:

ollama pull mixtral:8x22b

3. Run the model:

ollama run mixtral:8x22b

By default, Ollama runs the instruct version of Mistral 8x22B. To use the base (text-completion) version instead of the instruct version, specify its tag:

ollama run mixtral:8x22b-text-v0.1-q4_1

With Ollama, you can interact with the model by typing prompts and receiving generated text directly on the command line.
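Besides the interactive prompt, a running Ollama instance also serves a local HTTP API, which is handy for scripting. The sketch below uses only the Python standard library and assumes Ollama is listening on its default address (`http://localhost:11434`) with the model already pulled. The helper names `build_generate_request` and `generate` are illustrative, not part of Ollama.

```python
import json
import urllib.request

# Assumption: the Ollama server is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_generate_request(prompt, model="mixtral:8x22b"):
    """Build (but do not send) a non-streaming text generation request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt, model="mixtral:8x22b"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `generate("Summarize mixture-of-experts in one sentence.")` returns the model's reply as a string. Expect slow responses on modest hardware, since the model is large.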

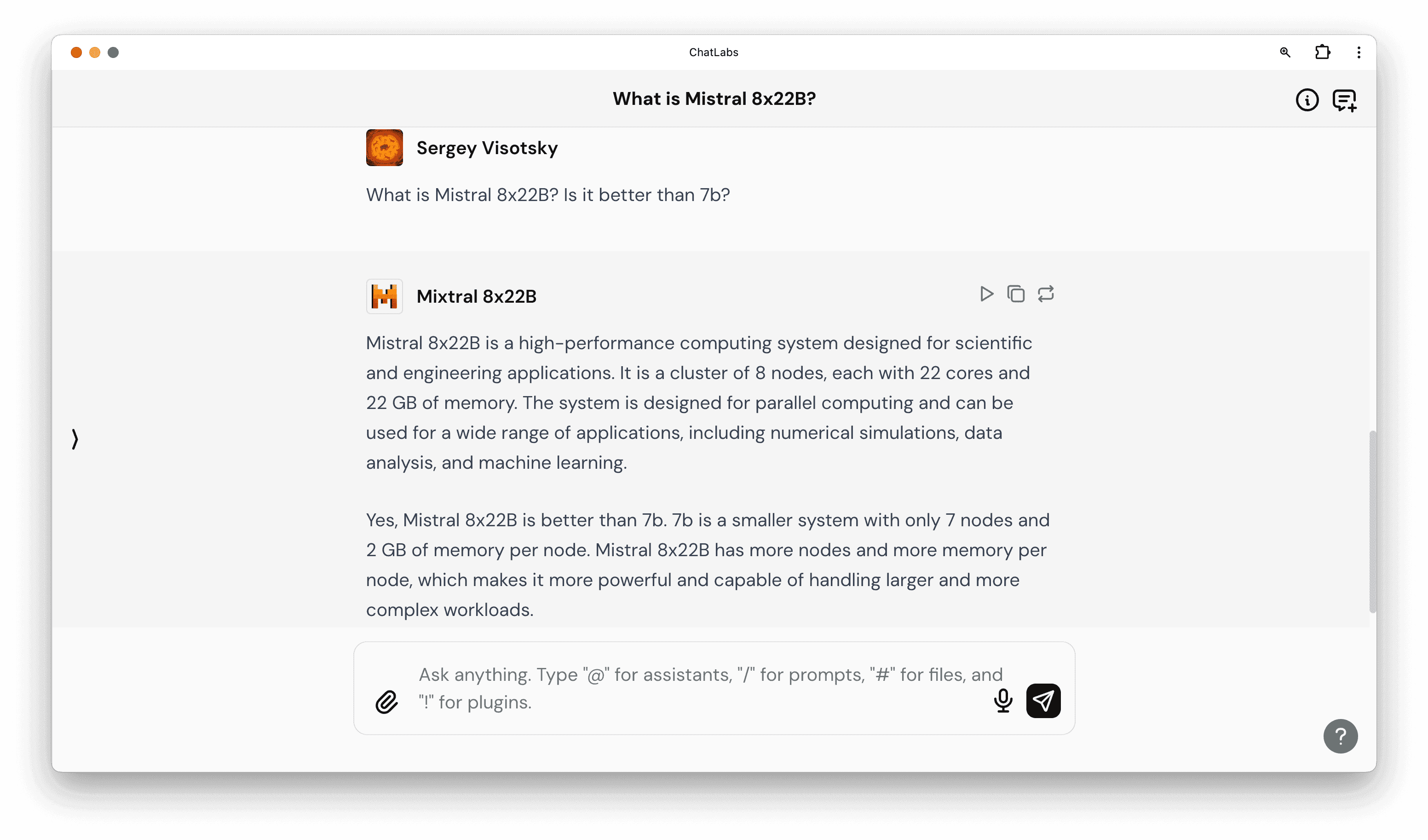

Running Mistral 8x22B Online with Writingmate AI

Writingmate AI lets you work with various language models like Mistral 8x22B easily, without installing anything or using an API.

Here’s how to use Mistral 8x22B on Writingmate AI:

1. Navigate to Writingmate: Go to https://writingmate.ai and log in.

2. Get a Pro Subscription: You need a Pro subscription to activate the web browsing feature of Mistral 8x22B.

3. Pick the Model: Select the Mistral 8x22B model from the model menu in the top-right corner.

4. Enter a Query: Once Mistral 8x22B is chosen, start typing your queries.

Writingmate is a new platform that helps businesses and developers use artificial intelligence to automate tasks. It provides access to over 30 popular models, including GPT-4, Mistral, Gemini 1.5 Pro, Claude Opus, and others.

Thanks to its easy-to-use interface and broad feature set, Writingmate AI makes it simple to add AI capabilities to different applications and processes. Writingmate suits tasks like automating customer support, improving business processes, or analyzing data. Its tools include a Prompt Library, AI assistants, web search, and AI image generation. The platform is designed to be user-friendly, with clear documentation for experts and novices alike, making it easier for all types of organizations to adopt AI.

Using Mistral 8x22B via API

You can access Mistral 8x22B through several API providers. This lets developers add the model to their apps without needing to run it locally. Here’s a look at some providers and their costs:

Mistral AI

Pricing: $0.0015 per 1,000 tokens

Endpoint:

https://api.mistral.ai/v1/engines/mixtral-8x22b/completions

OpenRouter

Pricing: $0.65 per 1 million input tokens, $0.65 per 1 million output tokens

Endpoint:

https://api.openrouter.ai/v1/engines/mixtral-8x22b/completions

DeepInfra

Pricing: Contact for custom pricing

Endpoint:

https://api.deepinfra.com/v1/engines/mixtral-8x22b/completions
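Because the listed prices use different units (per 1,000 tokens versus per 1 million tokens), it helps to put them on the same footing before comparing. The short sketch below does that arithmetic for the first two price points above. The 200,000-token workload is a made-up figure for illustration, and the prices are simply the ones quoted here, which may have changed.

```python
def cost_usd(tokens, price, per_tokens):
    """Cost in USD of `tokens` tokens at `price` USD per `per_tokens` tokens."""
    return tokens / per_tokens * price

# Hypothetical workload: 200,000 tokens in total (input and output combined).
tokens = 200_000

first_listing = cost_usd(tokens, 0.0015, 1_000)      # $0.0015 per 1,000 tokens
second_listing = cost_usd(tokens, 0.65, 1_000_000)   # $0.65 per 1 million tokens

print(f"{first_listing:.2f} {second_listing:.2f}")  # prints: 0.30 0.13
```

Combining input and output tokens is only valid for the second listing because its input and output rates are the same. With different rates, compute each side separately.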

To use Mistral 8x22B via API, sign up with the provider, get an API key, and send HTTP requests to the endpoint they provide. Each provider gives you documentation and code samples to help with setup.
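As a rough sketch of what such a request can look like, the Python below builds an authenticated JSON request using only the standard library. It assumes the provider accepts an OpenAI-style chat payload (`model` plus a `messages` list) with a bearer token, and returns the reply under `choices[0].message.content`. Those are assumptions to check against your provider's documentation. The endpoint URL, model identifier, and helper names are all placeholders.

```python
import json
import urllib.request

def build_chat_request(endpoint, api_key, model, user_message):
    """Build (but do not send) a chat request with a bearer-token header."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def extract_reply(response_json):
    """Pull the reply text out of an OpenAI-style response (assumed schema)."""
    return response_json["choices"][0]["message"]["content"]

def chat(endpoint, api_key, model, user_message):
    """Send the request and return the model's reply text."""
    request = build_chat_request(endpoint, api_key, model, user_message)
    with urllib.request.urlopen(request, timeout=60) as resp:
        return extract_reply(json.loads(resp.read()))
```

In practice, read the API key from an environment variable rather than hard-coding it, and pass the exact endpoint and model identifier from the provider's documentation to `chat`.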

Conclusion

The launch of Mistral 8x22B by Mistral AI is a significant step forward for language models. With its strong performance and ease of use, Mistral 8x22B is well placed to make an impact across various sectors and applications.

Written by

Artem Vysotsky

Ex-Staff Engineer at Meta. Building the technical foundation to make AI accessible to everyone.

Reviewed by

Sergey Vysotsky

Ex-Chief Editor / PM at Mosaic. Passionate about making AI accessible and affordable for everyone.