When DeepSeek R1 entered the market, many enthusiasts and professionals started to consider it a budget-friendly AI model with reasoning. Yet once many of them started using OpenAI o3-mini and o3-mini-high, the choice became much less obvious. My name is Artem, and for a couple of years I have been constantly testing and comparing AI models on a variety of tasks. Today we will see how these two models compare.

To start, here is a quick feature comparison of the o3-mini and DeepSeek R1 models. This table highlights some key differences. Both models were developed and trained for reasoning, which makes them advanced reasoning models. I will give my quick opinion after the table and then go into more detail.

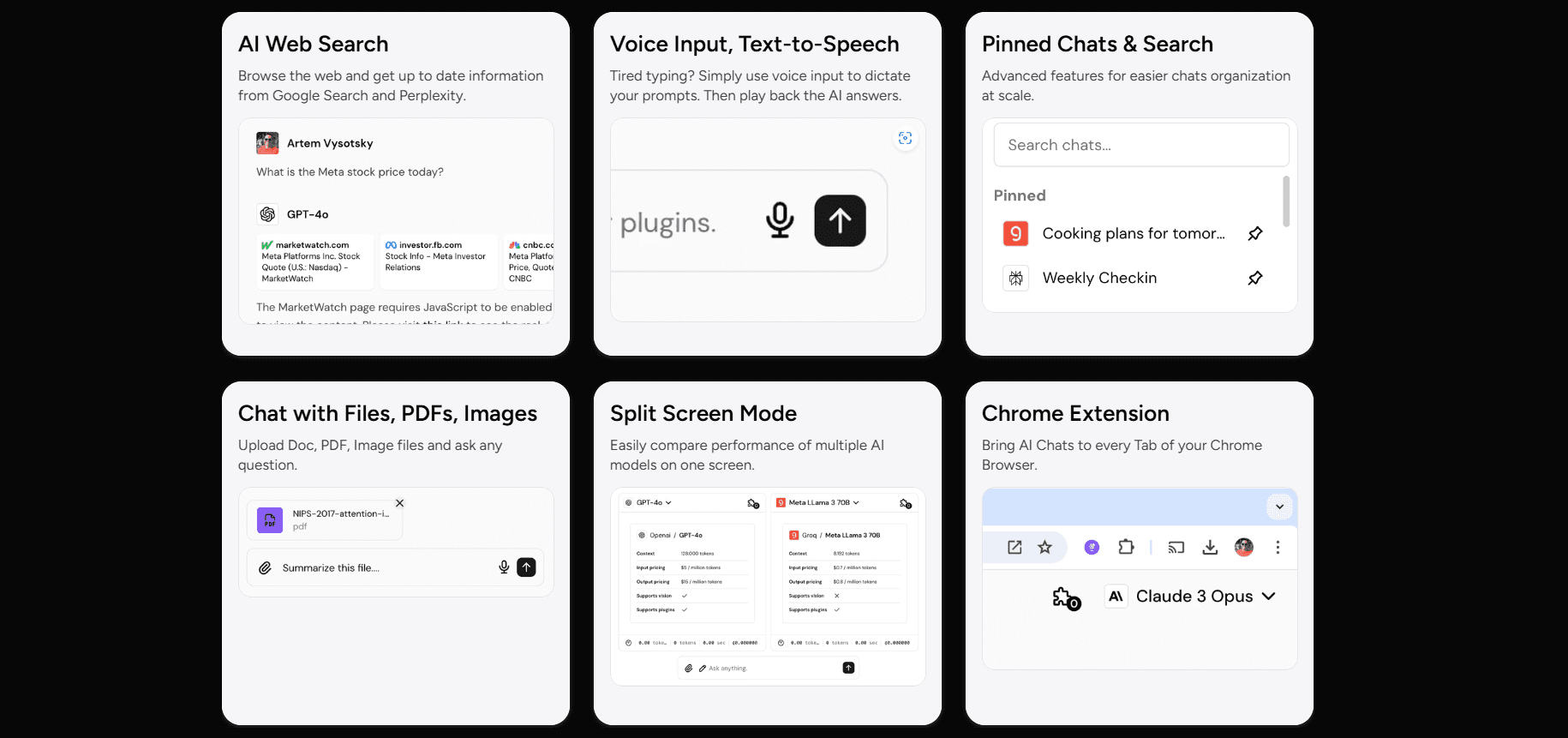

Both models are available to use and try on Writingmate.ai, a complete all-in-one AI platform with over 100 AI models, including GPT-4o, Claude 4 and 3.7 Sonnet, Llama 4 Maverick and Scout, and many more models that can handle text, images, and code.

| Feature | OpenAI o3 Mini | DeepSeek R1 |
|---|---|---|
| Architecture | Not officially disclosed (a ~200B-parameter dense transformer is unconfirmed) | Mixture-of-Experts (MoE) transformer (~685B total, ~37B active per token) |
| Context Length | Up to 200,000 tokens | 128,000 tokens |
| Vision Support | Text-only (no image/vision) | Text-only (no image/vision) |
| Key Strengths | Excels in STEM reasoning (math, science, coding) | Strong open-source reasoning and coding performance |
| API Pricing | ~$1.10 (input) / $4.40 (output) per 1M tokens | Free basic chat; ~$0.14 (input, cache hit) / $2.19 (output) per 1M tokens |
| Availability | OpenAI API and ChatGPT (Plus/Pro/Enterprise) | Open-source weights (downloadable) plus free web interface; also accessible on multi-LLM platforms like Writingmate |
| Release Date | January 2025 | January 2025 |
| Developer Features | Supports function calling, structured outputs, streaming | No native function calling (community tools available) |

As the table shows, o3-mini is OpenAI’s latest small model optimized for speed and STEM tasks, while DeepSeek R1 is a very large open-source MoE model focused on powerful chain-of-thought reasoning. Below we will dive into their capabilities, key benchmarks, pricing, and typical use cases to help you decide which one best fits your needs.

OpenAI o3 Mini: Features and Capabilities

OpenAI’s o3-mini is a new “small reasoning” model that OpenAI released in early 2025. It inherits the o3 architecture, but unlike earlier releases and OpenAI’s "flagship" models, it is scaled down for cost and speed efficiency. According to OpenAI, o3-mini delivers exceptional STEM reasoning in math, science, and coding while using much less compute than larger models. In practice, this means o3-mini can answer complex physics questions or write code more cheaply than full GPT-4 models.

I also recommend looking at OpenAI’s benchmark of accuracy across the o-series models. It shows what is arguably the main difference between the standard o3-mini and what users call o3-mini-high: practically the same model, run with a higher reasoning-effort setting.

Features of o3 Mini

Let's look at a few of the most useful capabilities of OpenAI o3-mini and o3-mini-high.

STEM & Math Excellence:

OpenAI’s o3-mini excels at technical tasks. On difficult math and science problems it matches the performance of OpenAI o1, and with high reasoning effort it outperforms older models. It scored 83.6% on AIME math (vs ~78% for earlier models) and ~77% on PhD-level science questions, which makes it well suited for STEM work, from textbook exercises to competition-grade problems.

Top-Tier Coding Model?

o3-mini shines in programming. It scored up to 2073 Elo on Codeforces and, at ~49% accuracy on tough coding tasks, leads OpenAI’s models on the SWE-bench software engineering benchmark. With high reasoning effort it writes clearer, more accurate code, which makes it a strong choice for developers and competitive programmers.

Built for Developers:

o3-mini fully supports function calling, structured outputs (e.g., JSON), and streaming, all of which are standard for developer use. What stands out is that you can tune the reasoning effort (low, medium, or high) to balance speed against accuracy for each task.
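A minimal sketch of how the reasoning-effort setting and function calling fit together in one request. It assumes the standard OpenAI Chat Completions request shape with the `reasoning_effort` parameter; the `build_o3_mini_request` helper and the `convert_units` tool are hypothetical, and the code only builds and prints the request body, so it runs without an API key.

```python
import json

# Allowed values for o3-mini's reasoning_effort parameter.
REASONING_EFFORTS = ("low", "medium", "high")


def build_o3_mini_request(prompt: str, effort: str = "medium") -> dict:
    """Hypothetical helper: build a Chat Completions request body for o3-mini.

    The "convert_units" tool is hypothetical and only shows the shape of a
    function-calling definition (a JSON Schema for the arguments).
    """
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"effort must be one of {REASONING_EFFORTS}")
    return {
        "model": "o3-mini",
        "reasoning_effort": effort,  # higher effort: slower but more accurate
        "messages": [{"role": "user", "content": prompt}],
        "tools": [
            {
                "type": "function",
                "function": {
                    "name": "convert_units",  # hypothetical tool
                    "description": "Convert a value between physical units.",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "value": {"type": "number"},
                            "from_unit": {"type": "string"},
                            "to_unit": {"type": "string"},
                        },
                        "required": ["value", "from_unit", "to_unit"],
                    },
                },
            }
        ],
    }


payload = build_o3_mini_request("How many joules are in 3 kWh?", effort="high")
print(json.dumps(payload, indent=2))
# Sending it with the official SDK needs an API key and network access:
#   from openai import OpenAI
#   response = OpenAI().chat.completions.create(**payload)
```

Switching `effort` between "low" and "high" is the only change needed to move between the fast default and the "o3-mini-high" behavior described above.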

High Speed & Low Latency:

o3-mini is up to 5× faster than GPT-4, generating up to 180 tokens per second (vs ~30 for DeepSeek R1). Startup latency can reach ~13 seconds on complex prompts, but the high generation speed keeps overall response times short.
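To see how throughput and startup latency interact, here is a back-of-the-envelope estimate using the figures quoted above (180 and ~30 tokens/sec, ~13 s startup for o3-mini). The linear model (latency plus tokens divided by throughput) and the `estimated_response_time` helper are simplifying, hypothetical choices; real timings vary with server load, prompt size, and how many hidden reasoning tokens a model generates.

```python
def estimated_response_time(output_tokens: int, tokens_per_second: float,
                            startup_latency_s: float = 0.0) -> float:
    """Hypothetical helper: time to first token plus steady generation time."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return startup_latency_s + output_tokens / tokens_per_second


# Figures quoted in this article; real numbers vary per request.
O3_MINI_TPS = 180.0
O3_MINI_LATENCY_S = 13.0  # quoted for complex prompts
R1_TPS = 30.0

for tokens in (500, 2000, 8000):
    o3 = estimated_response_time(tokens, O3_MINI_TPS, O3_MINI_LATENCY_S)
    r1 = estimated_response_time(tokens, R1_TPS)  # no R1 latency quoted, assume 0
    print(f"{tokens:>5} tokens: o3-mini ~{o3:.1f}s, R1 ~{r1:.1f}s")
```

Because no startup latency is quoted for R1, the sketch treats it as zero, which flatters R1 on short answers; the gap in o3-mini's favor widens as the output grows.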

Massive 200K-Token Context Window:

Work with entire codebases, books, or very long conversations with ease. o3-mini supports 200,000 tokens, far more than earlier models like GPT-3.5 Turbo (4K–16K) and the 128K window of many Llama 3.x models. By the way, we have published a review of the recent Llama 4 Maverick, Scout, and Behemoth models, and you can read it here.
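A quick way to check whether a document fits a model's window is sketched below. It assumes the common rule of thumb of roughly 4 characters per token for English text (real tokenizers differ, and code or non-English text usually needs more tokens) and reserves a hypothetical 20K-token budget for the answer, since the window has to hold both the prompt and the output. The helper names are hypothetical.

```python
CONTEXT_WINDOWS = {"o3-mini": 200_000, "deepseek-r1": 128_000}
CHARS_PER_TOKEN = 4  # rough heuristic for English text, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Hypothetical helper: ceiling of len(text) / CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, model: str,
                    reserved_output_tokens: int = 20_000) -> bool:
    """Hypothetical helper: True if prompt plus output budget fits the window."""
    return estimate_tokens(text) + reserved_output_tokens <= CONTEXT_WINDOWS[model]


doc = "x" * 600_000  # ~150K estimated tokens, e.g. a large codebase dump
print(fits_in_context(doc, "o3-mini"))      # True: 150K + 20K <= 200K
print(fits_in_context(doc, "deepseek-r1"))  # False: 170K > 128K
```

For anything close to the limit, count tokens with the model's actual tokenizer rather than this estimate.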

Easy Access via ChatGPT (or API)

o3-mini is not open-source, but it is available via ChatGPT and the OpenAI API. As of 2025, ChatGPT Plus users get 150 messages per day, and Pro ($200/mo) users get unlimited access. Even free users can try it with rate limits (yes, you get o3-mini responses when you hit the “Reason” option). It is ready to use with no setup required, which makes o3-mini easier for beginners and gives it an advantage.

DeepSeek R1: Features and Capabilities

DeepSeek R1 is a large open-source reasoning model from the Chinese startup DeepSeek. It is built on a Mixture-of-Experts (MoE) architecture: R1’s total parameter count is massive (approximately 685 billion), yet only about 37 billion of those parameters “activate” for each token. This keeps the compute per query far below that of a dense model of the same size. R1 was trained with a novel pipeline that prioritized chain-of-thought reasoning.
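The idea behind "only ~37B of ~685B parameters activate per token" can be illustrated with a toy top-k router: a gate scores every expert, only the k best-scoring experts run, and their outputs are mixed with softmax weights renormalized over the chosen experts. This is a generic sketch of top-k MoE gating with made-up experts and scores, and `moe_forward` is a hypothetical helper; it is not DeepSeek's implementation, whose routing differs in its details and runs at a vastly larger scale.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, gate_scores, k=2):
    """Hypothetical toy top-k MoE layer for a single token.

    Only the k highest-scoring experts are evaluated; the rest stay idle.
    """
    top = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top


# Eight toy "experts", each just scaling its input by a different factor.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # made-up gate scores
y, used = moe_forward(10.0, experts, scores, k=2)
print(used)                            # [4, 1]: only 2 of 8 experts ran
print(f"active share: {37 / 685:.1%}")  # R1's ~37B active of ~685B total
```

The same principle explains the efficiency claim: per-token compute scales with the active parameters (about 5.4% of the total here), while the full parameter count still determines memory needs.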

Features of Deepseek R1: Open-Source AI with High Performance

Let's now look at some of the features DeepSeek offers its users. I have been using R1 almost since launch, and its pricing makes it a competitive option as of 2025.

Open Access:

DeepSeek R1 is fully open-source under the MIT license. You can run it locally or access it via the DeepSeek Chat platform. Model weights are available on Hugging Face.

Strong Reasoning, Coding, and Chain-of-Thought:

Achieves 97.3% on MATH-500 and ranks in the 96.3rd percentile on Codeforces, rivaling top proprietary models. R1 was trained with reinforcement learning to produce detailed, step-by-step explanations, which makes its problem-solving more transparent.

Affordable API:

DeepSeek still offers competitive pricing: $0.14 per 1M input tokens (cache hit; cache misses are billed at a higher rate) and $2.19 per 1M output tokens. Full details are on DeepSeek's API pricing page.

Large Context Window and Community Support

Supports up to 128,000 tokens, allowing it to process extensive documents and conversations. Development and community engagement are active, with ongoing improvements and distilled versions available for various use cases.

Performance Benchmarks

Let's go straight to the most interesting part. As is often the case in such comparisons, both models are extremely capable, but their benchmark results differ slightly. Let's compare them across three sets of tasks.

Math & Science:

OpenAI’s internal evaluations show o3-mini (especially at high reasoning effort) leading on advanced math and science problems. For example, o3-mini-high achieved about 83.6% on AIME competition math questions and 77.0% on tough PhD-level science questions. DeepSeek R1 is also strong on these benchmarks: it scored 90.8 on MMLU (a general-knowledge exam).

That result is very close to OpenAI o1’s 91.8. Notably, on the MATH-500 benchmark DeepSeek R1 scored 97.3%, beating earlier GPT-4-class models. In practice, both models can solve complex math and science problems, but o3-mini tends to give concise answers while R1 often spells out its reasoning.

Coding & Programming:

On coding benchmarks, o3-mini and DeepSeek R1 are often close. In Codeforces programming competitions, o3-mini (high) scored 2073 Elo, higher than any earlier OpenAI model, while DeepSeek R1 ranked in the 96.3rd percentile of Codeforces competitors. Both compare well to GPT-4, and both can generate functional code and debug it.

In a recent side-by-side test, o3-mini’s code was often more structured and faster to generate, thanks to its optimized design.

DeepSeek R1, by contrast, sometimes produced more verbose explanations in comments. On software engineering questions, o3-mini-high reached about 48.9% accuracy, roughly on par with DeepSeek R1.

Other Tasks:

On general reasoning tasks such as logic puzzles, o3-mini generally outperforms older models. DeepSeek R1 also does very well and has been noted to solve tasks that challenge GPT-4. On language understanding (MMLU), R1’s 90.8% puts it above the original GPT-4 and close to OpenAI o1. Overall, o3-mini tends to have a slight edge in reasoning consistency and speed, while R1’s training approach sometimes leads to more creative solutions.

In summary, on raw performance o3-mini typically “wins” many benchmarks by a small margin, especially at its high reasoning setting. However, DeepSeek R1’s scores are very close, and on certain math or coding subtasks it even surpasses o3-mini. The practical takeaway is that both are great: o3-mini excels at structured STEM tasks, and R1 is right there on code and reasoning, with the bonus of being free and open-source.

o3 Mini vs DeepSeek R1: Price, Features & Performance Breakdown

When comparing o3-mini and DeepSeek R1, both offer good token efficiency, but for different reasons.

o3-mini often uses fewer tokens because its answers tend to be more concise.

R1 is more affordable per token and delivers solid results, especially for long or repeated tasks.

That means that if your priority is tokens per dollar, R1 is more economical. But if accuracy per token is key, o3-mini's high setting can outperform it in complex scenarios.

How Much Does o3-mini Cost per Generation?

OpenAI’s o3-mini is priced at $1.10 per million input tokens and $4.40 per million output tokens via the API. DeepSeek R1 is far cheaper: about $0.14 for input (cache hit) and $2.19 for output per million tokens. At these list prices, o3-mini costs about 2x more per output token and nearly 8x more per cached input token, so the gap per generation depends on your input/output mix. That said, o3-mini's faster and more concise task handling may reduce token usage overall, offsetting part of the higher rate.
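The per-generation cost is simple arithmetic on the list prices above. The sketch below assumes a hypothetical request of 2,000 input and 6,000 output tokens and uses R1's cache-hit input price, so its R1 figure is a lower bound. It also solves for the o3-mini output length at which both models would cost the same, one way to test the idea that shorter o3-mini answers can offset its higher rate. The `Pricing` class is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical helper holding USD list prices per 1M tokens."""
    input_per_m: float
    output_per_m: float

    def cost(self, input_tokens: float, output_tokens: float) -> float:
        return (input_tokens * self.input_per_m
                + output_tokens * self.output_per_m) / 1_000_000


# List prices quoted in this article. R1's input price is the cache-hit rate;
# cache misses cost more, so the R1 result is a lower bound.
O3_MINI = Pricing(1.10, 4.40)
DEEPSEEK_R1 = Pricing(0.14, 2.19)

in_tok, out_tok = 2_000, 6_000  # hypothetical reasoning-heavy request
o3 = O3_MINI.cost(in_tok, out_tok)
r1 = DEEPSEEK_R1.cost(in_tok, out_tok)
print(f"o3-mini ${o3:.4f} vs R1 ${r1:.4f} ({o3 / r1:.1f}x)")

# Output length at which o3-mini costs the same as this R1 request:
# solve O3_MINI.cost(in_tok, x) == r1 for x.
break_even_out = (r1 * 1_000_000 - in_tok * O3_MINI.input_per_m) / O3_MINI.output_per_m
print(f"break-even o3-mini output: {break_even_out:.0f} tokens")
```

With these assumed numbers, o3-mini costs about 2.1x as much per request and would have to answer in roughly 2,550 output tokens, instead of R1's 6,000, to break even.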

o3-mini offers three reasoning-effort settings (low, medium, and high), so you can adjust for speed or accuracy when using the API.

Speed: Generates up to 180 tokens/sec, significantly faster than DeepSeek R1’s ~31 tokens/sec.

Context Window: can work with up to 200K tokens, ideal for long documents or codebases.

Function Calling & Streaming: o3-mini supports structured outputs and real-time streaming.

Source: https://x.com/Yuchenj_UW/status/1886236439421296766

o3 Mini Query Limit

OpenAI applies these limits for ChatGPT users:

Free users get limited access to o3-mini (via the “Reason” option).

Plus users ($20/mo): 150 messages per day.

Pro users ($200/mo): Unlimited access to o3-mini in ChatGPT (API usage is billed separately).

o3 Mini vs DeepSeek: Which One to Use?

After this detailed comparison, it is time for a quick conclusion with two short tips on which model to choose and when. Based on my experience combined with the benchmarks:

Choose o3-mini-high over DeepSeek R1 if your task involves hard STEM problems, coding, or long reasoning chains.

Pick DeepSeek R1 if your goal is open-source flexibility, lower cost, or self-hosted deployment.

This is why, for most professional use cases, o3-mini is the better fit, with faster responses, stronger reasoning, and developer-friendly features. For budget-conscious developers or open-source enthusiasts, though, DeepSeek R1 offers excellent value.

Use Cases & Strengths: o3 Mini vs DeepSeek R1

Both o3-mini and DeepSeek R1 are built for reasoning, coding, and content creation. Still, they excel in slightly different ways. o3-mini is fast, API-ready, and highly structured, while R1 offers deep explanations and is as flexible as an open-source project usually is. In short, use o3-mini when you need top-tier STEM reasoning with an easy API/chat interface and don’t mind paying for tokens. Use DeepSeek R1 when you want a free/open solution or need lots of reasoning detail (chain-of-thought), and cost or model customization is a priority.

Developer Tools & Coding with Both Models

For software development, o3-mini is streamlined for speed and integration. DeepSeek R1 is slower but more verbose and interpretable, which makes it a good fit for learning or complex debugging.

o3-mini supports function calling, JSON output, and runs reliably in real-time apps.

DeepSeek R1 explains code in detail and performs strongly in coding benchmarks.
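Since R1 lacks native function calling, a common community workaround is to ask the model to reply with a JSON object and parse it yourself. The sketch below assumes the open-weights behavior of wrapping the chain of thought in `<think>...</think>` tags (DeepSeek's hosted API returns the reasoning in a separate field instead). The JSON reply convention, the `convert_units` tool name, and the helper functions are hypothetical prompt and code choices, not DeepSeek features.

```python
import json
import re

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Hypothetical helper: split an R1 completion into (reasoning, answer)."""
    match = THINK_RE.search(raw)
    if not match:
        return "", raw.strip()
    return match.group(1).strip(), raw[match.end():].strip()


def extract_tool_call(answer: str):
    """Hypothetical helper: parse the JSON spanning the first '{' to the last '}'.

    Returns None when the answer contains no valid JSON object.
    """
    start, end = answer.find("{"), answer.rfind("}")
    if start == -1 or end < start:
        return None
    try:
        return json.loads(answer[start:end + 1])
    except json.JSONDecodeError:
        return None


raw = (
    "<think>The user wants 3 kWh in joules, so call the converter.</think>\n"
    '{"tool": "convert_units", '
    '"arguments": {"value": 3, "from_unit": "kWh", "to_unit": "J"}}'
)
thoughts, answer = split_reasoning(raw)
call = extract_tool_call(answer)
print(thoughts)
print(call["tool"], call["arguments"])
```

Keeping the reasoning separate from the parsed call also preserves R1's step-by-step explanation for logging or teaching, which is the strength described above.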

Education & Math Tutoring

Both models are strong in STEM subjects and can solve difficult problems with high accuracy. o3-mini is faster, while R1 often shows its full reasoning path.

o3-mini excels in physics, algebra, and competition-level math.

R1 scored higher than GPT-4 on some math tests and is great for learning step-by-step.

Writing & Content Creation

For writing tasks, the models differ in tone. o3-mini is focused and clean. R1 is more expressive and narrative-driven.

Use o3-mini for SEO writing, product docs, and structured text.

Use R1 for storytelling, idea generation, or creative content.

Research & Long-Form Input

Both support long context windows (200K tokens for o3-mini, 128K for DeepSeek R1), which is useful for document analysis or multi-file processing.

o3-mini handles summaries, cross-document Q&A, and API-based data pulls.

R1 is strong at reviewing lengthy texts with full reasoning but lacks built-in API tools.

Productivity & Business Use

In business workflows, speed and structure are key. o3-mini offers smoother integrations, while R1 offers cost savings and more control.

o3-mini is ideal for internal tools, ChatGPT-based workflows, and fast output.

R1 is good for drafting or prototyping, and can be self-hosted for data security.

Multimodal Use

Neither model supports vision or audio input directly. Both are text-only, but they can handle the text side of a multimodal stack. ChatGPT gives an illusion of multimodality because it integrates multiple OpenAI models, much as Writingmate.ai combines 100+ AI models in a single tool.

Accessing advanced AI models like OpenAI's o3-mini and DeepSeek R1 has become more streamlined with platforms such as Writingmate.ai. This platform offers a centralized interface to interact with over 200 AI models, eliminating the need for multiple subscriptions or complex setups.

Unified Access via Writingmate.ai

Writingmate.ai is an easy-to-use chatbot where you can seamlessly switch between models like o3-mini and DeepSeek R1, Claude 4 and 3.7 Sonnet, Gemini, Llama 4 Maverick or Scout, and many more. It also lets you compare outputs with its Comparison feature, performs well for daily tasks, and spares you the hassle of managing multiple platforms. Here are some of Writingmate's key features:

All-Model Switch: Effortlessly toggle between models such as o3-mini, DeepSeek R1, GPT-4o, Claude 3.7, or Mixtral 8x22B.

Split-Screen Comparison: Interact with two AI models side-by-side to evaluate their responses in real-time.

Integrated Tools: Utilize functionalities like web search, file uploads, and image generation within the same platform.

Flexible Plans: Access a range of models with subscription plans starting at $9.99/month, offering up to 100 messages per day for Pro models and 50 for Ultimate models.

You can try it for free here.

Alternative Access Points

While Writingmate.ai consolidates many models on one platform, o3-mini and DeepSeek R1 are also available through their official channels.

OpenAI o3-mini: Available via the OpenAI API and integrated into ChatGPT for Plus and Pro subscribers.

DeepSeek R1: Accessible through DeepSeek's official website and downloadable from platforms like Hugging Face for self-hosting. Don't be put off by the language: you can switch the site to English.

For users seeking a comprehensive and flexible environment to explore and compare these models, Writingmate.ai is a useful all-in-one solution.

Happy experimenting, and be sure to check out the Writingmate blog for more AI model news, comparisons and detailed tutorials. Until the next article!

Artem

Written by

Artem Vysotsky

Ex-Staff Engineer at Meta. Building the technical foundation to make AI accessible to everyone.

Reviewed by

Sergey Vysotsky

Ex-Chief Editor / PM at Mosaic. Passionate about making AI accessible and affordable for everyone.