Introduction to AI Image Generation with Stable Diffusion

Stable Diffusion is a powerful AI model that generates images from text descriptions, called "prompts." Imagine typing "a cat wearing a top hat in a spaceship," and the AI creates a picture just like that! The more precise a prompt is, the closer the image usually comes to what you have in mind.

But what if you want to create images in a specific style or with unique elements? That's where training your own Stable Diffusion model comes in. This article guides you through the process step by step.

Understanding the Basics: How Stable Diffusion Learns

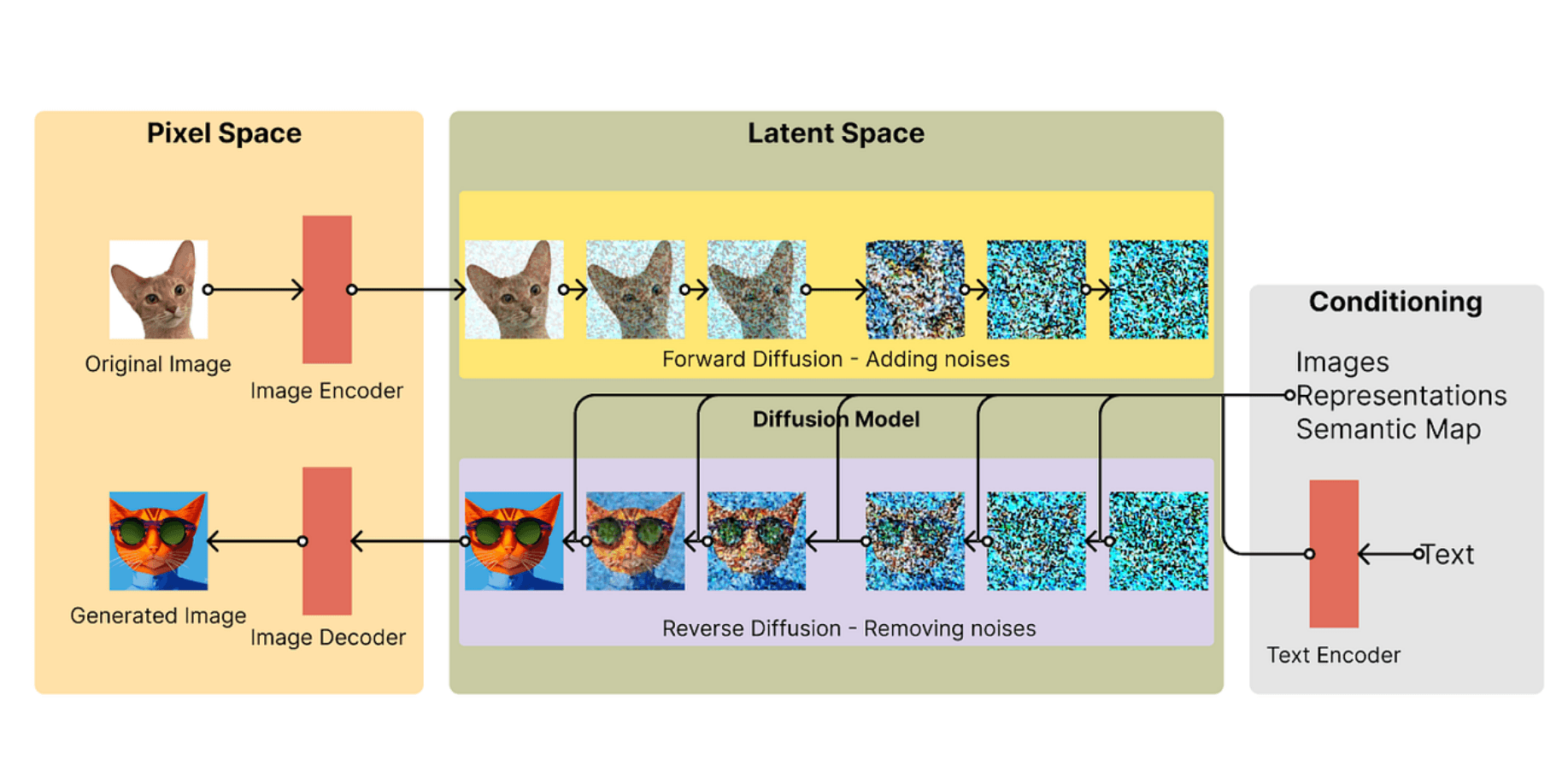

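Before diving into the how-to, let's look at how Stable Diffusion learns. Stable Diffusion is a latent diffusion model: rather than working directly in pixel space (the full-resolution image), it first compresses images into a much smaller latent space with a variational autoencoder (VAE) and runs the diffusion process there, which makes training and generation far cheaper. The key ingredients are: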

Datasets: Stable Diffusion is trained on massive datasets of images and their text descriptions. This data teaches the model the relationship between words and visual elements.

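Diffusion Process: During training, noise is added to images step by step, and a neural network (a U-Net in Stable Diffusion) learns to predict and remove that noise. At generation time, the model starts from pure noise and removes it gradually until an image emerges. Learning to undo noise teaches the model the underlying structure of images.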

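Text Encoder: When you give the model a text prompt, a text encoder (CLIP's text encoder in Stable Diffusion v1.x) converts the words into a numerical representation called embeddings. These embeddings guide the denoising process so that the generated image matches the prompt.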

Image Decoder: Once denoising is finished, the decoder of the model's variational autoencoder (VAE) turns the resulting latent representation back into a full-resolution image in pixel space.
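The pieces above can be sketched in plain Python. Two assumptions to flag: the latent-shape helper uses Stable Diffusion v1.x numbers (the VAE shrinks each side by 8x and outputs 4 channels), and the noise schedule is the simple linear one from the DDPM paper (betas from 1e-4 to 0.02 over 1,000 steps), whereas Stable Diffusion itself uses a slightly different schedule. The forward process jumps from a clean sample x0 to step t with x_t = sqrt(ᾱ_t)·x0 + sqrt(1 - ᾱ_t)·ε, and training asks the network to predict ε. Lists of floats stand in for latent tensors, text conditioning is left out, and `fake_unet` is a hypothetical placeholder for the real U-Net.

```python
import math
import random


def latent_shape(height, width, downsample=8, latent_channels=4):
    """(channels, height, width) of the latent; defaults assume Stable Diffusion v1.x."""
    if height % downsample or width % downsample:
        raise ValueError("image sides must be multiples of the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t for a linear beta schedule (DDPM-style assumption)."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Forward process: jump from clean x0 straight to noisy x_t; also return the noise used."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return x_t, noise


def training_loss(x0, denoiser, alpha_bars, rng):
    """One training example: random step, add noise, score the noise prediction with MSE."""
    t = rng.randrange(len(alpha_bars))
    x_t, noise = add_noise(x0, t, alpha_bars, rng)
    predicted = denoiser(x_t, t)
    return sum((p - n) ** 2 for p, n in zip(predicted, noise)) / len(noise)


def fake_unet(x_t, t):
    """Hypothetical stand-in for the U-Net: always predicts zero noise."""
    return [0.0] * len(x_t)


# 4 * 64 * 64 = 16,384 latent values versus 512 * 512 * 3 = 786,432 pixel values (48x fewer)
print(latent_shape(512, 512))  # (4, 64, 64)

rng = random.Random(0)
alpha_bars = linear_alpha_bars()
x0 = [0.5, -0.25, 1.0, 0.0]  # a tiny "latent" with four values
print(training_loss(x0, fake_unet, alpha_bars, rng))
```

A real training step does the same thing on whole latent tensors, with the U-Net also receiving the prompt's text embeddings.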

Steps to Train Your Stable Diffusion Model

1. Gathering Your Training Data

Gathering images and descriptions is the crucial first step, because they determine what your model learns. Imagine you're curating an entire art exhibition: you need to pick the paintings that will shape the audience's understanding of the artist's vision. In the same way, the images and descriptions you choose form the foundation of your model's knowledge.

When selecting images, consider the diversity of the dataset. You want your model to learn from a variety of examples, just as a child learns to recognize dogs by seeing different breeds, sizes, and colors. Diverse images help your model generalize better and produce more accurate results.

Descriptions are equally important. They should be concise and clear and provide relevant information about the image. Think of them as captions that help the model understand the context and significance of the image. For instance, a description of a photo of the Eiffel Tower might include its location, the time of day, and the season.

By carefully gathering and curating your images and descriptions, you lay the foundation for a strong model that can learn from its training data. Here are some important technical points to consider:

Image Quality: Use high-quality images that are clear and well-lit.

Image Variety: Include a diverse range of images representing the style or elements you want your model to generate.

Descriptive Captions: Write clear and detailed captions for each image, using keywords that describe the content, style, and mood.

Dataset Size: A larger dataset generally leads to better results. Aim for at least a few hundred images to start.
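Before a long training run, it helps to check that every image actually has a caption. Many Stable Diffusion fine-tuning tools expect a caption file with the same base name next to each image (for example `cat_01.png` plus `cat_01.txt`), but some use a single metadata file instead, so treat this layout as an assumption and check your tool's docs. The sketch below uses only the standard library; the folder name and the 200-image threshold (standing in for "a few hundred") are hypothetical.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def audit_dataset(folder, min_images=200):
    """Report images that lack a non-empty sidecar .txt caption (assumed layout)."""
    images = sorted(p for p in Path(folder).iterdir() if p.suffix.lower() in IMAGE_EXTENSIONS)
    missing = []
    for image in images:
        caption = image.with_suffix(".txt")
        # A caption file that exists but holds only whitespace counts as missing.
        if not caption.exists() or not caption.read_text(encoding="utf-8").strip():
            missing.append(image.name)
    return {
        "num_images": len(images),
        "missing_captions": missing,
        "enough_images": len(images) >= min_images,
    }


# Example with a hypothetical folder:
# print(audit_dataset("my_dataset"))
```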

2. Setting Up Your Training Environment

Training AI models requires suitable software and a capable GPU. You have two main options:

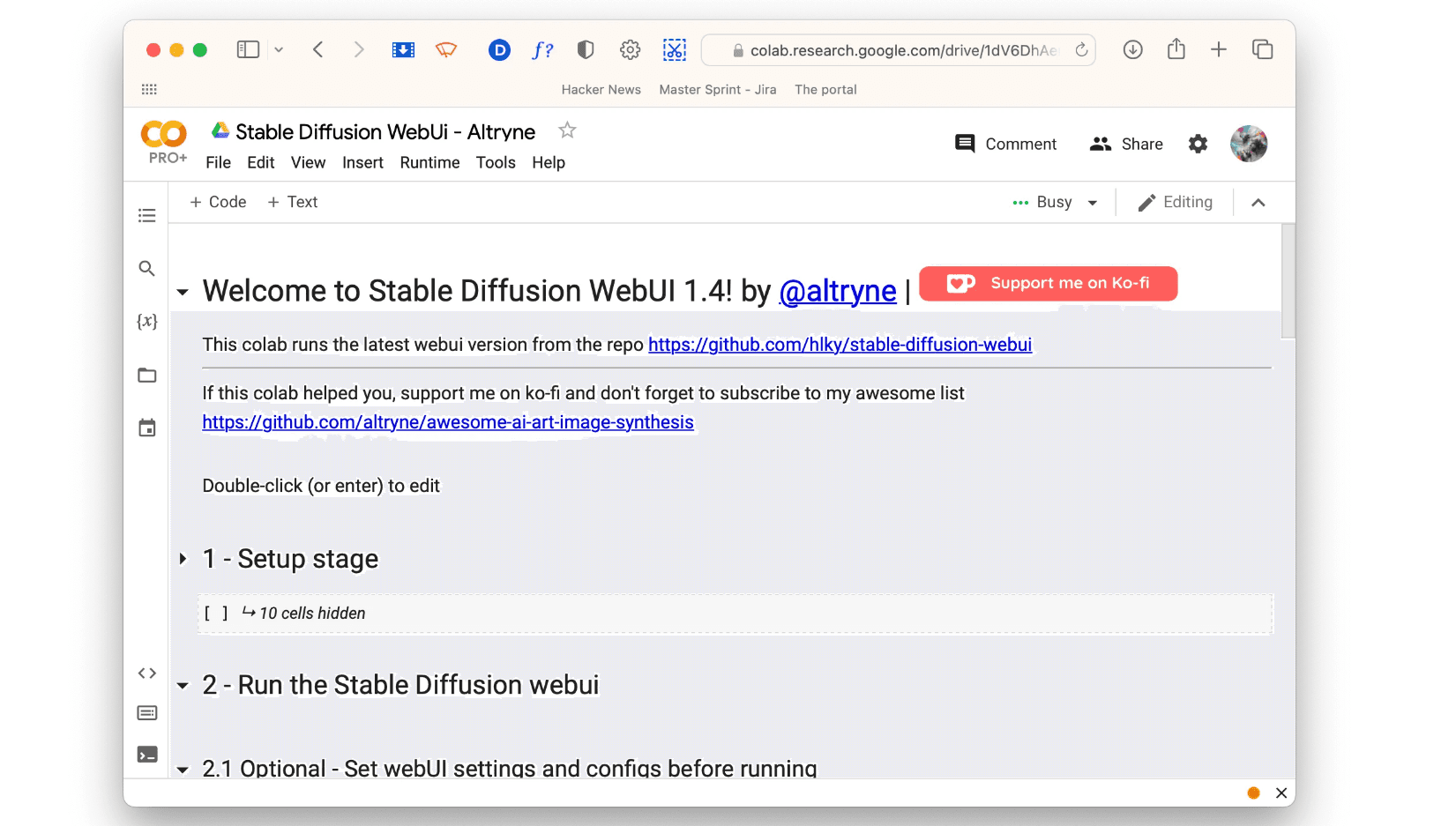

Cloud Computing: Services like Google Colab and Amazon SageMaker offer cloud-based environments with the GPU power you need, which makes it easier to get started.

Local Machine: If you have a computer with a powerful GPU, you can set up a local environment using frameworks like PyTorch or TensorFlow. This option gives you more control but requires more technical expertise.
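Whichever option you choose, it's worth confirming that your environment actually sees a GPU before you start. This minimal sketch assumes PyTorch, the framework most Stable Diffusion tooling is built on; it only reports what it finds and installs nothing. In Colab, first select a GPU under Runtime > Change runtime type. It checks for CUDA GPUs only, not Apple Silicon.

```python
import importlib.util


def describe_gpu():
    """Return a short description of the CUDA GPU PyTorch can see, if any."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"
    import torch

    if not torch.cuda.is_available():
        return "PyTorch is installed, but no CUDA GPU is visible"
    props = torch.cuda.get_device_properties(0)
    vram_gb = props.total_memory / 1024**3  # bytes -> GiB
    return f"{props.name} with {vram_gb:.1f} GB of VRAM"


print(describe_gpu())
```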

Google Colab is probably the easiest way to run Stable Diffusion (SD) yourself. Why? It works like Google Docs for code, and your notebooks run on Google's servers. You can use Colab for free, and free notebooks do exist (the stability.ai notebook, for example), but I'd recommend upgrading to Pro or Pro+ if you're going to use SD frequently. The paid tiers give you more powerful GPUs and longer session times.

For a complete tutorial on running Stable Diffusion in Colab (similar steps apply to SageMaker), see: https://www.unlimiteddreamco.xyz/articles/how-to-run-stable-diffusion-in-google-colab/

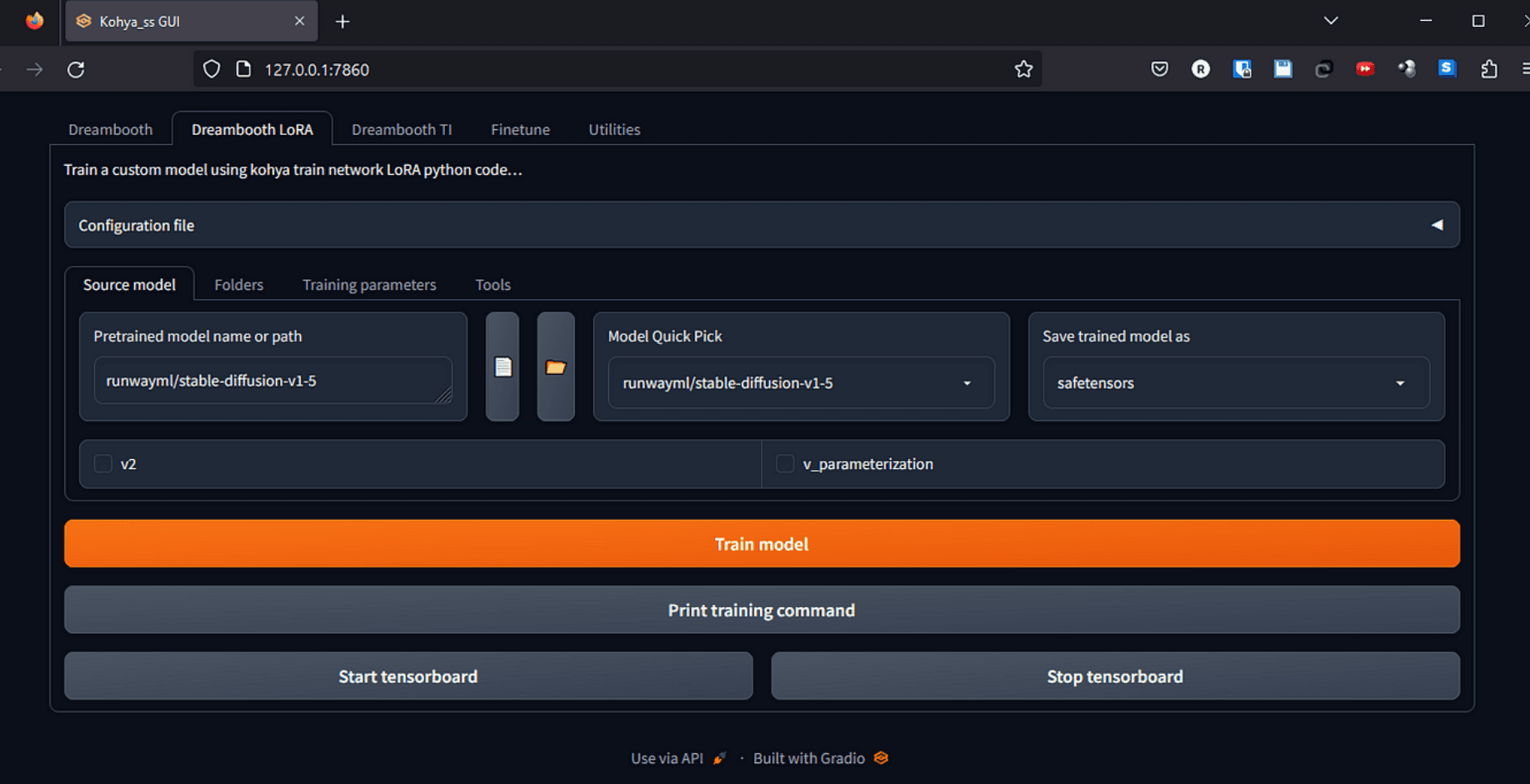

3. Choosing a Pre-trained Model or a LoRA

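When it comes to training a Stable Diffusion model, you don't have to start from scratch. Instead, you can take advantage of pre-trained models and fine-tune them with your own dataset, or train a LoRA (Low-Rank Adaptation): a small add-on that adapts a base model to a new style or subject by training a few low-rank weight matrices instead of the whole model. Either approach saves you a significant amount of time and resources, allowing you to focus on other important aspects of your project.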

Imagine trying to build a house from the ground up. It would take a lot of effort and resources to create the foundation, walls, and roof. But what if you had access to pre-built modules that you could simply assemble to create your dream home? That's essentially what pre-trained models offer. Models can vary from small and very specific to larger and more universal.

Many popular pre-trained Stable Diffusion models are available, each with its own strengths and weaknesses. For instance, Stable Diffusion v1.5 is a versatile model that can handle a wide range of image generation tasks. It's like a Swiss Army knife: reliable and capable of adapting to different situations. Waifu Diffusion, by contrast, is a specialist toolbox that is good at making anime-style characters. It's for creators who want high-quality anime art without spending the time and resources to train a model from scratch. Dreamlike Diffusion creates dreamlike and surreal imagery that can evoke emotions and spark imagination. There are similar specialized models for Pixar-style animation, Midjourney-like images, photorealistic paintings, and more.

You can find these and many other pre-trained models on platforms like Hugging Face. This online hub offers a vast collection of pre-trained models that you can easily integrate into your project. By using them, you can speed up development and focus on perfecting your craft.
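LoRA (Low-Rank Adaptation) keeps a pre-trained weight matrix W (d × k) frozen and learns a small update written as the product of two thin matrices, B (d × r) and A (r × k), scaled by alpha / r. The stdlib sketch below shows how that update is merged into the base weights and why it is cheap to train. The toy matrices and the 768 × 768 layer size are illustrative values, not taken from a specific model.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][m] * b[m][j] for m in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_lora(W, B, A, alpha, rank):
    """Return W + (alpha / rank) * (B @ A): the base weights with the LoRA update folded in."""
    delta = matmul(B, A)
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


def trainable_params(d, k, rank=None):
    """Weights updated by full fine-tuning (rank=None) versus by a rank-r LoRA."""
    return d * k if rank is None else rank * (d + k)


W = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2x2 base weights (toy values)
B = [[0.5], [0.0]]            # d x r, with r = 1
A = [[0.0, 2.0]]              # r x k
print(merge_lora(W, B, A, alpha=1.0, rank=1))  # [[1.0, 1.0], [0.0, 1.0]]
print(trainable_params(768, 768))              # 589824
print(trainable_params(768, 768, rank=4))      # 6144
```

For this example layer, a rank-4 LoRA trains about 1% of the weights that full fine-tuning would touch. In the original LoRA formulation, B starts at zero, so before training the merged model is identical to the base model.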

4. Data Preprocessing and Augmentation

Before training, you need to prepare your dataset:

Resizing: Make sure all images are the same size, typically a square resolution like 512x512 pixels (the native resolution of Stable Diffusion v1.5).

Normalization: Scale pixel values to a standard range (Stable Diffusion training scripts typically map 0 to 255 onto -1 to 1), which helps the model learn more effectively.

Augmentation: You can artificially increase the size and variety of your dataset by applying random transformations like flips, rotations, and color adjustments.
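The three preprocessing steps can be sketched with the standard library on a tiny grayscale "image" stored as a list of rows. This assumes center-cropping to a square before resizing (one common choice; some tools pad or group images by aspect ratio instead), scaling pixels from 0..255 to [-1, 1], and a random horizontal flip as the augmentation. In a real pipeline you would use an image library such as Pillow or torchvision for these operations.

```python
import random


def center_crop_box(width, height):
    """(left, top, right, bottom) of the largest centered square; resize it to 512x512 afterwards."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def normalize(pixels):
    """Map 0..255 pixel values to the range [-1, 1]."""
    return [[p / 127.5 - 1.0 for p in row] for row in pixels]


def random_hflip(pixels, rng, p=0.5):
    """Mirror the image left-to-right with probability p."""
    return [row[::-1] for row in pixels] if rng.random() < p else pixels


print(center_crop_box(768, 512))  # (128, 0, 640, 512)
print(normalize([[0, 255]]))      # [[-1.0, 1.0]]
print(random_hflip([[1, 2, 3]], random.Random(0)))
```

Skip horizontal flips if your images contain text or subjects whose left and right sides differ in ways the model should learn.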

Ground Truth refers to the actual data used for training, which also serves as a benchmark for evaluating the model's performance. It is the original, unaltered data that the model tries to learn from. Including ground truth data gives the model a reference point, which keeps the new training from diverging significantly from the established baseline and thereby maintains the model's accuracy and relevance.
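The reference-point idea can be sketched as a weighted sum of two losses: one on your new training images and one on reference ("ground truth") images the model should keep reproducing. This mirrors the prior-preservation loss used in DreamBooth fine-tuning. The function names, plain-list inputs, and default weight of 1.0 are illustrative assumptions.

```python
def mse(pred, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def combined_loss(new_pred, new_target, ref_pred, ref_target, ref_weight=1.0):
    """Loss on the new data plus a weighted term that anchors the model to reference data."""
    return mse(new_pred, new_target) + ref_weight * mse(ref_pred, ref_target)


# Perfect on the new data but drifting on the reference data: the second term penalizes the drift.
print(combined_loss([0.1, 0.2], [0.1, 0.2], [0.0, 0.0], [1.0, 1.0], ref_weight=0.5))  # 0.5
```

Raising `ref_weight` holds the model closer to its baseline; lowering it lets the new data pull harder.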

Boomerang is a method designed to enhance local sampling on image manifolds using diffusion models. It involves partially reversing the diffusion process to generate samples that are close to a given input image. This technique ensures that the generated images remain similar to the original, thus preserving the integrity of the sample while allowing for efficient computation. Boomerang is particularly useful for tasks requiring high-fidelity image generation without extensive computational resources.
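Boomerang's core loop can be sketched in a few lines: noise the input only part of the way (to an intermediate step t rather than the final step), then run the model's reverse (denoising) steps from t back to 0. The smaller t is, the more of the original signal survives and the closer the result stays to the input. In this minimal sketch, `reverse_step` is a hypothetical placeholder for one denoising step of a real pre-trained diffusion model, and the linear DDPM-style schedule is an assumption.

```python
import math
import random


def alpha_bars_linear(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t for a linear beta schedule (assumption)."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def boomerang(x0, t, reverse_step, alpha_bars, rng):
    """Partially noise x0 up to step t, then denoise from step t back down to step 0."""
    a = alpha_bars[t]
    x = [math.sqrt(a) * v + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for v in x0]
    for step in range(t, -1, -1):
        x = reverse_step(x, step)  # one denoising step of a real model would go here
    return x


ab = alpha_bars_linear()
for t in (100, 300, 600):
    # sqrt(alpha_bar_t) is the weight left on the original image after noising to step t
    print(f"t={t}: signal weight {math.sqrt(ab[t]):.2f}")
```

Because the model only has to undo part of the noise, this needs far fewer denoising steps than generating an image from pure noise.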

5. Training Your Model

Now you're ready to start the training process!

Hyperparameters: These are settings that control how the model learns, such as the learning rate and batch size. Different values can noticeably change the final result, so expect to experiment.

Training Loop: The training process involves feeding your dataset to the model multiple times, gradually adjusting its parameters to minimize errors.

Monitoring Progress: Keep an eye on the training progress by tracking metrics like loss and generating sample images to see how the model is learning.
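To see the training loop, hyperparameters, and loss monitoring in action without a GPU, here is a deliberately tiny stand-in, not Stable Diffusion itself. It trains a two-parameter linear "noise predictor" with mini-batch gradient descent on the same noise-prediction objective (x_t = sqrt(ᾱ)·x0 + sqrt(1 - ᾱ)·ε, predict ε). The one-value dataset, the single fixed noise level, and the hyperparameter values are all illustrative assumptions.

```python
import math
import random


def train_toy_noise_predictor(learning_rate=0.1, batch_size=16, steps=500, seed=0):
    rng = random.Random(seed)
    x0 = 1.0          # hypothetical one-value "dataset"
    alpha_bar = 0.5   # one fixed noise level (assumption)
    w, b = 0.0, 0.0   # linear noise predictor: eps_hat = w * x_t + b
    history = []
    for step in range(steps):
        grad_w = grad_b = loss = 0.0
        for _ in range(batch_size):
            eps = rng.gauss(0.0, 1.0)
            x_t = math.sqrt(alpha_bar) * x0 + math.sqrt(1.0 - alpha_bar) * eps
            err = (w * x_t + b) - eps
            loss += err * err / batch_size            # batch-mean squared error
            grad_w += 2.0 * err * x_t / batch_size    # d(loss)/dw
            grad_b += 2.0 * err / batch_size          # d(loss)/db
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        if step % 100 == 0:
            history.append((step, loss))              # monitor progress
    return w, b, history


w, b, history = train_toy_noise_predictor()
for step, loss in history:
    print(f"step {step}: loss {loss:.6f}")
print(f"learned w={w:.3f}, b={b:.3f} (ideal: {math.sqrt(2):.3f}, -1.000)")
```

Because ε is exactly a linear function of x_t in this toy, the loss can fall to zero; with real images it plateaus above zero, which is why sample images matter as much as the loss curve. Try a much larger `learning_rate` (for example 1.0) to watch the loss blow up instead of shrinking.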

Here is an extensive video tutorial on the whole process of training a model in Stable Diffusion (I found it useful when I tried to train my first one):

6. Evaluating and Fine-tuning Your Model

Once training is complete, evaluate your model's performance:

Generate Sample Images: Test your model by giving it prompts similar to the ones in your training dataset.

Adjust Hyperparameters: If you're not happy with the results, adjust the hyperparameters or train for more epochs.

Iterative Process: Training AI models is often iterative. You may need to go back and adjust your dataset, hyperparameters, or training process to achieve the desired outcome.
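A practical way to compare training runs is to render the same prompts with the same random seeds from each checkpoint, so that differences come from the model rather than from randomness. The sketch below uses Hugging Face Diffusers' `StableDiffusionPipeline`. The checkpoint path, prompts, step count, and guidance scale are placeholder assumptions, and `render_samples` needs a CUDA GPU with `torch` and `diffusers` installed. Its imports sit inside the function so the prompt/seed helper runs anywhere.

```python
def make_eval_jobs(prompts, seeds):
    """Every (prompt, seed) pair, so each checkpoint is judged on identical inputs."""
    return [(prompt, seed) for prompt in prompts for seed in seeds]


def render_samples(checkpoint_dir, jobs, steps=30, guidance_scale=7.5):
    """Generate one image per (prompt, seed) job from a fine-tuned checkpoint."""
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(checkpoint_dir, torch_dtype=torch.float16).to("cuda")
    images = []
    for prompt, seed in jobs:
        generator = torch.Generator(device="cuda").manual_seed(seed)  # fixed seed per job
        result = pipe(prompt, num_inference_steps=steps, guidance_scale=guidance_scale, generator=generator)
        images.append(result.images[0])
    return images


jobs = make_eval_jobs(["a cat wearing a top hat in a spaceship"], seeds=[1, 2, 3])
print(len(jobs))  # 3
# Hypothetical usage on a GPU machine:
# for i, image in enumerate(render_samples("./my-finetuned-model", jobs)):
#     image.save(f"sample_{i}.png")
```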

Tools and Resources for Training Stable Diffusion

Hugging Face Diffusers: A library that simplifies working with Stable Diffusion and other diffusion models, providing tools for training, fine-tuning, and inference. https://huggingface.co/docs/diffusers/index

Google Colab: A cloud-based notebook platform with a free tier that offers access to GPUs, making it easier to train AI models. https://colab.research.google.com/

Amazon SageMaker: A cloud machine learning platform that provides tools and resources for training and deploying AI models. https://aws.amazon.com/sagemaker/

Civitai: A community platform with hundreds of pre-existing models, LoRAs, datasets, and useful information. https://civitai.com/

Unlocking the Potential of AI with Writingmate

Ready to explore the world of AI image generation beyond Stable Diffusion? Writingmate offers a full platform to experiment with many cutting-edge AI models. It includes:

DALL-E: Another powerful image generation model known for its ability to create realistic and imaginative visuals.

Stable Diffusion: The model covered in this guide, integrated into the platform.

GPT-4 and GPT-4o mini are OpenAI's GPT language models. They can generate human-quality text and help with writing, translation, code generation, and more.

Claude, Mistral, and Llama are other available language models, each with its own strengths and capabilities, to help you find the perfect fit for your projects.

Writingmate empowers you to harness the power of AI in one convenient web app. Start creating with the latest and greatest AI technology today!

Try it for free: https://writingmate.ai

Thank you for reading! I hope this tutorial was helpful and made things clear. For more in-depth articles on AI, visit our blog at writingmate.ai/blog, which we write with a love of technology, people, and their needs.

Written by

Artem Vysotsky

Ex-Staff Engineer at Meta. Building the technical foundation to make AI accessible to everyone.

Reviewed by

Sergey Vysotsky

Ex-Chief Editor / PM at Mosaic. Passionate about making AI accessible and affordable for everyone.